System Design: Cache

- Authors

- Name

- Full Stack Engineer

- @fse_pro

Table of Contents

- Introduction

- What is Cache

- Importance of Caching

- Caching Strategies

- Use Cases

- Caching Best Practices

- Conclusion

- Resources

Introduction

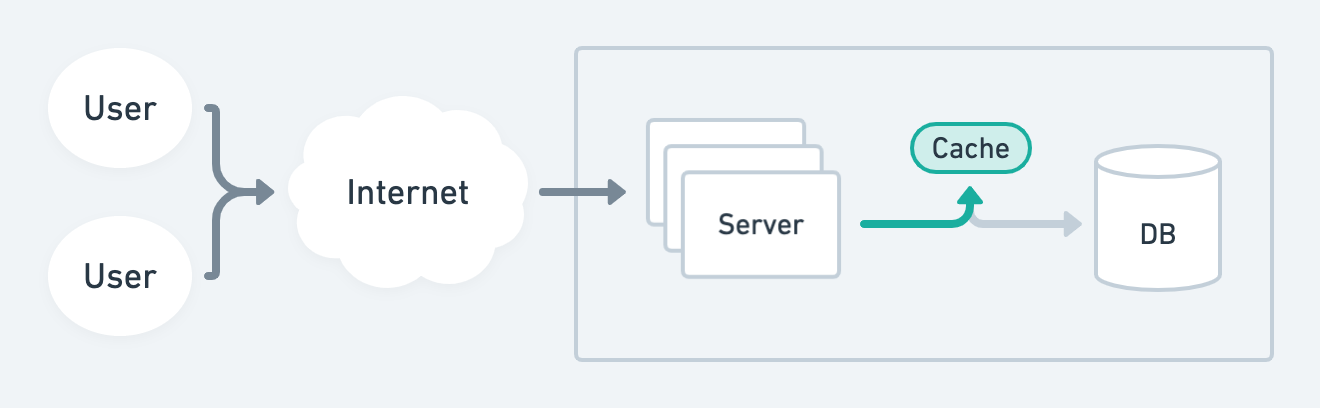

As web applications grow in complexity and user traffic, reducing latency and optimizing response times become critical. Caching is a technique that stores frequently accessed data in a temporary storage location to reduce the time taken to fetch the same data repeatedly from the original source.

What is Cache

A cache is a temporary storage location that holds a copy of frequently accessed data or computed results. When a user requests specific data, the system checks the cache first. If the data is found in the cache, it is retrieved faster, avoiding the need to fetch it from the original source, such as a database or external API.
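The check-the-cache-first flow described above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: `make_cached_reader` and `slow_lookup` are hypothetical names, and a plain dictionary stands in for the cache.

```python
from typing import Callable, Dict, List


def make_cached_reader(fetch_from_source: Callable[[str], str]) -> Callable[[str], str]:
    """Wrap a slow lookup so repeated reads of the same key hit the cache."""
    cache: Dict[str, str] = {}

    def read(key: str) -> str:
        if key in cache:
            # Cache hit: return the stored copy without touching the source.
            return cache[key]
        # Cache miss: fetch from the original source, then remember the result.
        value = fetch_from_source(key)
        cache[key] = value
        return value

    return read


source_calls: List[str] = []


def slow_lookup(key: str) -> str:
    """Hypothetical stand-in for a database query or external API call."""
    source_calls.append(key)
    return f"value-for-{key}"


read = make_cached_reader(slow_lookup)
read("user:1")  # miss: goes to slow_lookup
read("user:1")  # hit: served from the cache
print(len(source_calls))  # 1
```

The second read never reaches `slow_lookup`, which is the saving a cache is meant to provide.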

Importance of Caching

Caching offers several benefits in system design:

Improved Performance: Caching reduces response times, as data is readily available in the cache, avoiding the need for expensive computations or database queries.

Reduced Latency: By serving data from the cache, latency is reduced, leading to a better user experience.

Scalability: Caching helps handle increased user traffic by taking load off the backend systems.

Cost Savings: With fewer requests reaching backend resources, less database and compute capacity is needed, which lowers infrastructure costs.

Caching Strategies

There are various caching strategies to consider when designing a caching system:

1. In-Memory Caching

In-memory caching stores data in main memory (RAM), which is far faster to access than disk or a remote data store. It suits data that is read often but changes rarely. Common tools for in-memory caching include Redis and Memcached.

2. Distributed Caching

Distributed caching spreads cached data across multiple nodes, so the cache can grow beyond the memory of a single machine and any application instance can reach it. When entries are also replicated across nodes, the data stays available even if one node fails.
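One simple way to decide which node holds a given key is to hash the key and take the result modulo the number of nodes. The sketch below assumes that approach and simulates each node with an in-process dictionary; `ShardedCache` and the node names are hypothetical, and real deployments often use consistent hashing and replication instead.

```python
import hashlib
from typing import Dict, List, Optional


class ShardedCache:
    """Toy distributed cache: each 'node' is a dict, chosen by hashing the key."""

    def __init__(self, node_names: List[str]) -> None:
        if not node_names:
            raise ValueError("at least one node is required")
        self.node_names = list(node_names)
        self.nodes: Dict[str, Dict[str, str]] = {name: {} for name in self.node_names}

    def _node_for(self, key: str) -> str:
        # A stable hash (unlike Python's built-in hash()) gives the same
        # placement across processes and restarts.
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        index = int.from_bytes(digest[:8], "big") % len(self.node_names)
        return self.node_names[index]

    def set(self, key: str, value: str) -> None:
        self.nodes[self._node_for(key)][key] = value

    def get(self, key: str) -> Optional[str]:
        return self.nodes[self._node_for(key)].get(key)


cache = ShardedCache(["node-a", "node-b", "node-c"])
cache.set("session:42", "alice")
print(cache.get("session:42"))  # alice
```

Because this sketch keeps no replicas, losing a node loses that node's share of the entries, and changing the node count remaps most keys. Consistent hashing reduces the remapping; replication addresses the data loss.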

3. Write-Through and Write-Back Caching

Write-Through Caching: Write-through caching writes data to the cache and the underlying data store as part of the same operation. This keeps the two consistent, but every write pays the latency of the underlying store.

Write-Back Caching: Write-back caching writes data only to the cache first, and then asynchronously writes it to the underlying data store. It provides low-latency writes, but the data store lags behind the cache until the flush happens, and writes that have not yet been flushed can be lost if the cache fails.
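The difference between the two write policies is easiest to see side by side. In this sketch both classes are hypothetical, a dictionary stands in for the underlying data store, and the asynchronous flush of a write-back cache is simplified to an explicit `flush()` call.

```python
from typing import Dict, Set


class WriteThroughCache:
    """Every write goes to the data store and the cache in the same call."""

    def __init__(self, store: Dict[str, str]) -> None:
        self.store = store
        self.cache: Dict[str, str] = {}

    def write(self, key: str, value: str) -> None:
        self.store[key] = value  # pay the store's write latency now
        self.cache[key] = value


class WriteBackCache:
    """Writes land in the cache first and reach the store only on flush."""

    def __init__(self, store: Dict[str, str]) -> None:
        self.store = store
        self.cache: Dict[str, str] = {}
        self.dirty: Set[str] = set()  # keys written but not yet persisted

    def write(self, key: str, value: str) -> None:
        self.cache[key] = value
        self.dirty.add(key)

    def flush(self) -> None:
        # In a real system this would run in the background.
        for key in self.dirty:
            self.store[key] = self.cache[key]
        self.dirty.clear()


database: Dict[str, str] = {}
write_back = WriteBackCache(database)
write_back.write("cart:7", "3 items")
print("cart:7" in database)  # False: the store is behind the cache
write_back.flush()
print("cart:7" in database)  # True
```

The window between `write()` and `flush()` is where the temporary inconsistency lives.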

4. Cache Invalidation

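Cache invalidation removes or refreshes cached entries so that stale data is not served. There are several cache invalidation strategies: time-based invalidation (entries expire after a set lifetime), event-based invalidation (entries are removed when the underlying data changes), and manual invalidation (entries are purged explicitly).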
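Time-based and event-based invalidation can live in the same small class. The sketch below is one possible shape, with hypothetical names (`TTLCache`, `invalidate`). The clock is injectable so that expiry can be demonstrated without sleeping.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class TTLCache:
    """Entries expire after ttl_seconds, or earlier if invalidated explicitly."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self._entries: Dict[str, Tuple[str, float]] = {}  # key -> (value, expires_at)

    def set(self, key: str, value: str) -> None:
        self._entries[key] = (value, self.clock() + self.ttl_seconds)

    def get(self, key: str) -> Optional[str]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            # Time-based invalidation: drop the entry once its lifetime is over.
            del self._entries[key]
            return None
        return value

    def invalidate(self, key: str) -> None:
        # Event-based or manual invalidation: call this when the source changes.
        self._entries.pop(key, None)


fake_now = [0.0]
cache = TTLCache(ttl_seconds=60, clock=lambda: fake_now[0])
cache.set("price:sku-1", "9.99")
print(cache.get("price:sku-1"))  # 9.99
fake_now[0] = 61.0               # pretend 61 seconds have passed
print(cache.get("price:sku-1"))  # None
```

Expired entries are removed lazily here, on the next read. A cache that must also bound memory would need a periodic sweep or an eviction policy on top.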

5. FIFO Cache

A FIFO (First-In, First-Out) cache uses an eviction policy in which the items that were inserted earliest are evicted first when the cache reaches its capacity, regardless of how often or how recently they were accessed.
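A minimal FIFO cache can be built on `collections.OrderedDict`, which remembers insertion order. The class name and the choice to leave an updated key in its original position are assumptions of this sketch.

```python
from collections import OrderedDict
from typing import Hashable, Optional


class FIFOCache:
    """Evicts the oldest inserted key when full; reads never change the order."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._items: "OrderedDict[Hashable, object]" = OrderedDict()

    def get(self, key: Hashable) -> Optional[object]:
        return self._items.get(key)

    def put(self, key: Hashable, value: object) -> None:
        if key not in self._items and len(self._items) >= self.capacity:
            # popitem(last=False) removes the entry that was inserted first.
            self._items.popitem(last=False)
        # Assigning to an existing key keeps its original position.
        self._items[key] = value


cache = FIFOCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")         # reading "a" does not protect it
cache.put("c", 3)      # evicts "a", the oldest insertion
print(cache.get("a"))  # None
```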

6. LRU Cache

An LRU (Least Recently Used) cache uses an eviction policy in which the items that have gone longest without being accessed are evicted first when the cache reaches its capacity.
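An LRU cache needs to track recency on every access. One common way to sketch it in Python is with `collections.OrderedDict`, moving a key to the end whenever it is read or written, so the front of the dictionary is always the least recently used entry. The class name is hypothetical.

```python
from collections import OrderedDict
from typing import Hashable, Optional


class LRUCache:
    """Evicts the least recently used key when capacity is exceeded."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._items: "OrderedDict[Hashable, object]" = OrderedDict()

    def get(self, key: Hashable) -> Optional[object]:
        if key not in self._items:
            return None
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key: Hashable, value: object) -> None:
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # drop the least recently used


cache = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")         # "a" is now the most recently used
cache.put("c", 3)      # evicts "b"
print(cache.get("b"))  # None
print(cache.get("a"))  # 1
```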

7. LFU Cache

An LFU (Least Frequently Used) cache uses an eviction policy in which the items with the fewest accesses are evicted first when the cache reaches its capacity.
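An LFU cache keeps an access count per key and evicts the key with the lowest count. The sketch below favors readability over speed: eviction scans all counts, which is O(n). Two choices here are assumptions rather than part of any standard definition: ties go against the key that was inserted earliest, and a write to an existing key counts as an access.

```python
from typing import Dict, Hashable, Optional


class LFUCache:
    """Evicts the key with the fewest recorded accesses when full."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._values: Dict[Hashable, object] = {}
        self._counts: Dict[Hashable, int] = {}

    def get(self, key: Hashable) -> Optional[object]:
        if key not in self._values:
            return None
        self._counts[key] += 1
        return self._values[key]

    def put(self, key: Hashable, value: object) -> None:
        if key in self._values:
            self._values[key] = value
            self._counts[key] += 1
            return
        if len(self._values) >= self.capacity:
            # min() returns the first minimal key in insertion order,
            # so ties are broken against the earliest inserted key.
            victim = min(self._counts, key=self._counts.get)
            del self._values[victim]
            del self._counts[victim]
        self._values[key] = value
        self._counts[key] = 1


cache = LFUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")
cache.get("a")
cache.get("b")
cache.put("c", 3)      # "b" has fewer accesses than "a", so "b" is evicted
print(cache.get("b"))  # None
```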

Use Cases

Caching is useful in various scenarios:

Content Caching: Storing frequently accessed static content like images, CSS, and JavaScript files in the cache to reduce load times.

Database Query Results: Caching the results of expensive database queries to avoid repeated computation.

API Responses: Caching responses from external APIs to reduce the response time for subsequent requests.
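For the query-result and API-response cases, Python's standard library already offers a small in-process cache: `functools.lru_cache`. The sketch below uses a hypothetical `count_orders` function as a stand-in for an expensive query, with a placeholder return value. It shows that a repeated call with the same argument does not run the function body again.

```python
from functools import lru_cache
from typing import List

query_log: List[int] = []  # records each time the "real" query runs


@lru_cache(maxsize=128)
def count_orders(customer_id: int) -> int:
    """Hypothetical expensive query; the body runs only on a cache miss."""
    query_log.append(customer_id)
    return customer_id * 2  # placeholder result, not real data


count_orders(7)  # miss: the body runs
count_orders(7)  # hit: served from the cache
print(len(query_log))             # 1
print(count_orders.cache_info())  # reports hits, misses, and current size
```

`lru_cache` has no expiry of its own, so cached results stay until they are evicted or `count_orders.cache_clear()` is called. That makes it a better fit for data that rarely changes.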

Caching Best Practices

To effectively implement caching, consider the following best practices:

Identify Cacheable Data: Determine which data can be cached and which data needs to be fetched from the original source every time.

Set Appropriate Time-to-Live (TTL): Define the time for which data should be cached before it needs to be refreshed.

Monitor Cache Health: Regularly monitor cache usage and performance to ensure it's serving its purpose effectively.
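One basic health signal is the hit ratio: the share of lookups answered from the cache. The sketch below counts hits and misses in a hypothetical `InstrumentedCache` wrapper. In practice these counters would usually be exported to whatever metrics system the application already uses.

```python
from typing import Dict, Optional


class InstrumentedCache:
    """Dictionary-backed cache that counts hits and misses."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> Optional[str]:
        if key in self._data:
            self.hits += 1
            return self._data[key]
        self.misses += 1
        return None

    def set(self, key: str, value: str) -> None:
        self._data[key] = value

    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        # Avoid dividing by zero before any lookups have happened.
        return self.hits / total if total else 0.0


cache = InstrumentedCache()
cache.get("page:/home")            # miss
cache.set("page:/home", "<html>")
for _ in range(3):
    cache.get("page:/home")        # three hits
print(cache.hit_ratio())           # 0.75
```

A persistently low hit ratio suggests the wrong data is being cached, the TTL is too short, or the cache is too small for the working set.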

Conclusion

Caching is a critical component in system design that significantly improves performance and scalability. By combining in-memory and distributed caching, write-through or write-back writes, cache invalidation, and eviction policies such as FIFO, LRU, and LFU, applications can deliver faster response times and a better user experience.